Head-Worn Displays for Order Picking

Evaluating how display positioning and device characteristics affect efficiency, accuracy, and comfort in warehouse picking tasks through controlled user studies

Optimizing Head-Worn Displays for Industrial Tasks

Order picking in warehouses requires constant attention switching between digital instructions and physical tasks. Through two controlled studies, we evaluated how HWD positioning and device characteristics affect worker performance, revealing critical design principles for wearable technology in industrial environments.

The Problem

Industrial wearable deployment requires understanding human factors, not just technical capabilities

With over 750,000 warehouses globally supporting trillions in commerce, small efficiency gains in order picking yield massive operational benefits. While head-worn displays promise to enhance worker guidance, their effectiveness depends critically on display positioning, device ergonomics, and interaction design.

Display positioning affects cognitive load

Workers must switch attention between digital instructions and the physical environment. Poor display placement can cause visual occlusion, neck strain, and longer task completion times.

Device characteristics have complex trade-offs

Weight, field of view, frame rate, and form factor each impact usability differently. Understanding these trade-offs is crucial for successful deployment in demanding industrial environments.

What I Did

Realistic Environment Design

Built a warehouse-scale picking environment with 7 shelving units, 525 books, and industry-standard spatial layouts for ecological validity

Controlled Experiments

Designed counterbalanced studies with TSP-optimized pick paths, standardized interfaces, and comprehensive performance metrics

Statistical Analysis

Applied repeated measures ANOVA, post-hoc testing, and preference ranking analysis to derive actionable design principles

Research Approach

Research Goals

How do head-worn display positioning and device characteristics affect efficiency, accuracy, and comfort in attention-switching industrial tasks like order picking?

- Which display positions in the visual field minimize cognitive overhead during mobile tasks?

- How do different HWD form factors (weight, FOV, frame rate) impact worker performance?

- What design principles can optimize HWDs for industrial deployment?

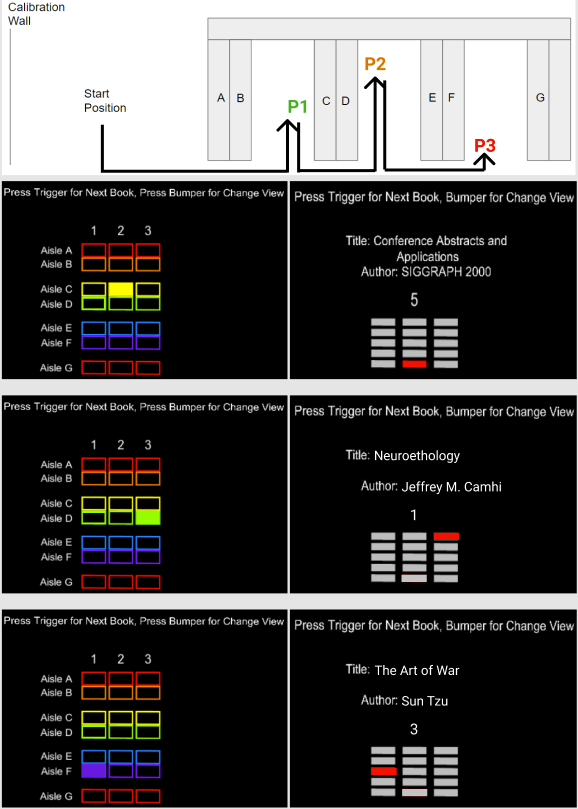

Realistic scale: 7 shelving units (A-G) with 6.5ft spacing, mimicking warehouse layouts

Standardized materials: 525 books with 100 pickable items, consistent visual complexity

Optimized paths: Traveling Salesman Problem (TSP) algorithm ensured consistent pick path lengths

Screen-stabilized UI: Fixed-position interfaces with consistent proportions across devices

Dual-view system: Environment view for navigation, shelf view for precise picking

Standardized control: Unified input method across all HWD conditions

Used the Held-Karp algorithm with Dijkstra's optimization to generate TSP tours, so that all pick paths had equivalent difficulty and travel distance. This controlled for spatial cognitive load and isolated the effect of the display variables on performance.
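A minimal Python sketch of the Held-Karp dynamic program shows how an exact TSP tour over a handful of pick locations can be computed. It is not the study's implementation: the function name `held_karp` and the example distance matrix are assumptions for illustration, and the matrix is taken as already computed (for example, from shortest walking distances between shelf positions).

```python
from itertools import combinations


def held_karp(dist):
    """Return (length, tour) of a shortest closed tour that starts and ends at node 0.

    dist is a square matrix where dist[i][j] is the travel cost from i to j.
    """
    n = len(dist)
    if n == 1:
        return 0, [0, 0]

    # best[(mask, j)] = (cost, prev): cheapest path that starts at node 0,
    # visits exactly the nodes in bitmask `mask`, and ends at node j.
    best = {}
    for j in range(1, n):
        best[(1 << j, j)] = (dist[0][j], 0)

    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev_mask = mask & ~(1 << j)
                best[(mask, j)] = min(
                    (best[(prev_mask, k)][0] + dist[k][j], k)
                    for k in subset
                    if k != j
                )

    full = (1 << n) - 2  # every node except the start node 0
    length, last = min(
        (best[(full, j)][0] + dist[j][0], j) for j in range(1, n)
    )

    # Walk the stored predecessors backwards to recover the visiting order.
    path, mask = [], full
    while last != 0:
        path.append(last)
        prev = best[(mask, last)][1]
        mask &= ~(1 << last)
        last = prev
    return length, [0] + path[::-1] + [0]


# Hypothetical 4-location distance matrix (not data from the study).
example = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
length, tour = held_karp(example)
print(length)  # 80
```

Held-Karp runs in O(n² · 2ⁿ) time, so it is practical only for short pick lists; comparing the returned tour lengths across generated paths is one way to check that paths are of similar travel distance.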

Phase 1: Display Positioning Study

Objective

Determine optimal interface positioning within Magic Leap One's visual field to minimize task interference and cognitive load during sparse picking tasks.

Experimental Design

Participants: 12 volunteers (ages 19-27, 7 female, 8 first-time pickers, all right-eye dominant)

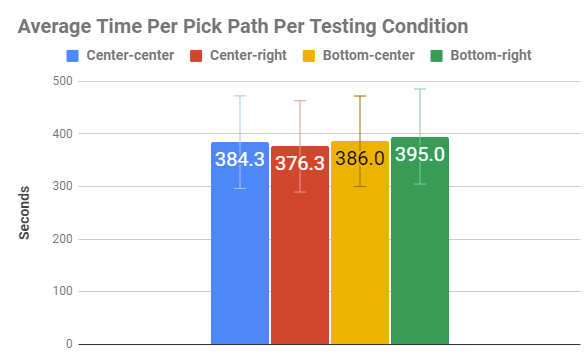

Conditions: 4 display positions tested - center-center, center-right, bottom-center, bottom-right

Protocol: 20 training + 20 testing pick paths (5 per position), counterbalanced Latin square design
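As an illustration of counterbalancing four conditions, the sketch below builds a balanced (Williams) Latin square in Python. The source does not state which Latin square was used, so this construction, the function name `balanced_latin_square`, and the rule for assigning participants to rows are all assumptions.

```python
def balanced_latin_square(conditions):
    """Build a Williams-design Latin square for an even number of conditions.

    Each condition appears once per row and once per column, and each
    condition immediately follows every other condition exactly once.
    """
    n = len(conditions)
    if n % 2:
        raise ValueError("this construction needs an even number of conditions")

    # First row follows the index pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    lo, hi = 1, n - 1
    while len(first) < n:
        first.append(lo)
        lo += 1
        if len(first) < n:
            first.append(hi)
            hi -= 1

    # Every later row shifts the first row by one condition.
    return [[conditions[(f + r) % n] for f in first] for r in range(n)]


positions = ["center-center", "center-right", "bottom-center", "bottom-right"]
square = balanced_latin_square(positions)

# Assumed assignment rule: participant p gets row p % 4, so with 12
# participants each of the 4 orders is used by 3 people.
orders = [square[p % len(square)] for p in range(12)]
for row in square:
    print(row)
```

Balancing first-order carryover in this way means that no position is systematically tested right after the same preceding position.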

Performance Metrics & Analysis

Quantitative measures: Task completion time, picking accuracy, NASA-TLX workload scores

Statistical analysis: Repeated measures ANOVA with Greenhouse-Geisser correction, pairwise t-tests with Benjamini-Hochberg adjustment
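The Benjamini-Hochberg adjustment named above can be sketched in a few lines of Python. The function name `benjamini_hochberg` and the p-values in the example are hypothetical and are not results from the study.

```python
def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(pvalues)
    # Indices of the p-values, sorted from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, scaling each by m / rank and
    # enforcing that adjusted values never increase as raw p-values shrink.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical raw p-values from four pairwise comparisons.
raw = [0.01, 0.04, 0.03, 0.005]
adj = benjamini_hochberg(raw)
print([round(p, 3) for p in adj])  # [0.02, 0.04, 0.04, 0.02]
```

A comparison is then reported as significant when its adjusted p-value falls below the chosen false discovery rate, such as 0.05.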

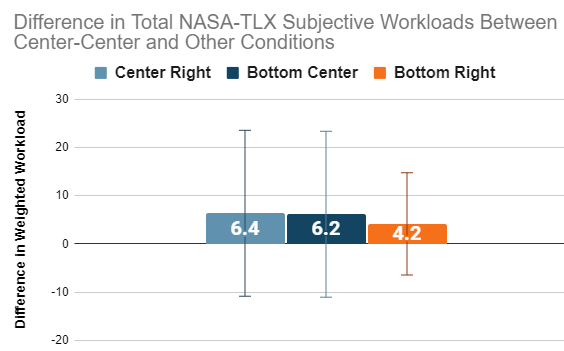

Key Statistical Findings

Accuracy differences: Center-center significantly outperformed bottom-right (T = -4.190, p = 0.006) and center-right (T = -2.600, p = 0.0375)

User preferences: Center-center rated significantly better than bottom-right for speed (Z = -2.067, p = 0.020), comfort (Z = -2.305, p = 0.011), and learnability

Surprising finding: No significant differences in task completion time between positions, suggesting that participants stopped to read the display, which masked any timing advantage

Phase 2: Multi-Device Comparison Study

Objective

Compare three major HWDs against industry-standard paper pick lists to understand how device characteristics impact picking performance and user experience.

Device Specifications & Setup

| Device | Weight | FOV (H × V) | Resolution | Frame Rate | Form Factor |
|---|---|---|---|---|---|
| Google Glass | 42 g | 12° × 8.3° | 640×360 | 120 fps | Monocular, lightweight |
| Magic Leap One | 316 g + 345 g pack | ~30° × 20° | 1270×800/eye | 120 fps | Binocular, body-worn processor |
| HoloLens | 635 g | ~34° × 23° | 1270×800/eye | 60 fps | Binocular, all-in-one |
| Paper | ~5 g | Full field | N/A | N/A | Traditional pick list |
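One way to compare display fidelity across devices with such different fields of view is horizontal pixels per degree. The Python sketch below computes it from the horizontal FOV and resolution values in the table. This is a rough illustration, not an analysis from the study: the Magic Leap One and HoloLens FOVs are approximate, and dividing pixels by degrees ignores optics and lens distortion.

```python
# (horizontal FOV in degrees, horizontal pixels), copied from the table.
DEVICES = {
    "Google Glass": (12.0, 640),
    "Magic Leap One": (30.0, 1270),
    "HoloLens": (34.0, 1270),
}


def pixels_per_degree(h_fov_deg, h_pixels):
    """Average horizontal pixel density across the field of view."""
    return h_pixels / h_fov_deg


density = {name: pixels_per_degree(*spec) for name, spec in DEVICES.items()}
for name, ppd in sorted(density.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {ppd:.1f} px/deg")
# Google Glass: 53.3 px/deg
# Magic Leap One: 42.3 px/deg
# HoloLens: 37.4 px/deg
```

Under this measure Google Glass packs its pixels most densely even though it has the fewest pixels overall, so "resolution" in the device comparison is best read as total pixel count.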

Participants: 12 volunteers (ages 19-23, 11 male, 10 first-time pickers, 9 right-eye dominant)

Standardized control: Twiddler3 wireless keyboard for consistent input across all HWD conditions

Performance Analysis

Statistical approach: Repeated measures ANOVA with Greenhouse-Geisser correction, one-tailed t-tests with Benjamini-Hochberg adjustment

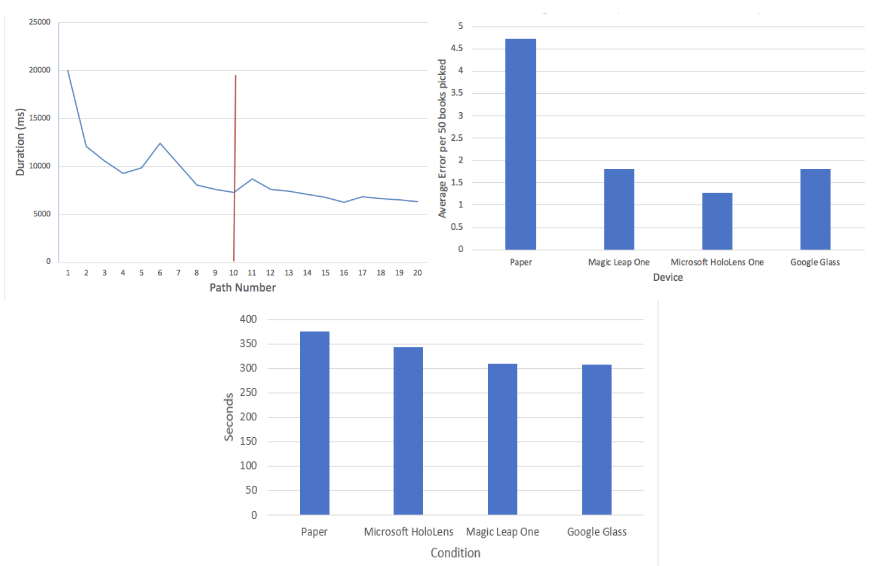

Training protocol: 20 training paths (performance plateaued around path 10), followed by 20 testing paths

Key findings: Only Google Glass achieved significantly faster task times than paper, and only HoloLens achieved significantly better accuracy than paper. Magic Leap and HoloLens were rated significantly less comfortable than paper.

Key Findings

Center-center positioning achieved the best accuracy but created visual occlusion issues. Bottom-right had the worst performance due to frequent head movements.

- Statistical significance: Center-center vs bottom-right accuracy (T = -4.190, p = 0.006)

- User preference: Center-center rated significantly better for learnability than the non-center positions

- Unexpected finding: No significant time differences despite comfort variations

This suggests workers adapt their behavior (stopping to read) to compensate for poor display placement, masking timing differences while maintaining accuracy impacts.

Google Glass (42g) achieved the only significant speed improvement over paper, despite having the lowest resolution and smallest FOV among HWDs tested.

- Speed advantage: Glass significantly faster than paper (p = 0.04), while heavier devices showed no improvement

- Comfort impact: Magic Leap (661g total) and HoloLens (635g) rated significantly less comfortable than paper

- Movement restriction: Magic Leap's body-worn processor limited natural movement during picking

- Frame rate effects: HoloLens's 60 fps frame rate caused motion-induced dizziness in participants

Results suggest that for mobile industrial tasks, ergonomic design may be more critical than display fidelity.

No single device excelled across all metrics, revealing distinct optimization strategies:

- Google Glass: Optimized for speed and comfort through lightweight design

- HoloLens: Optimized for accuracy through a wide FOV and detailed displays (fewer errors than paper)

- Magic Leap: Balanced approach with moderate performance across all metrics

- Trade-off patterns: Large FOV improved accuracy but increased eye strain and context switching time

This suggests deployment decisions should prioritize the most critical performance dimension for specific use cases rather than seeking general-purpose solutions.

Reflection

This research demonstrates that successful HWD deployment in industrial settings requires understanding the complex interactions between human factors, device characteristics, and task demands. The findings challenge the assumption that higher display fidelity yields better performance, and suggest that ergonomic design is essential for sustained use.