Context-Aware Cooking Assistant

Designing a smart assistant that supports continuous context switching during cooking by understanding the user's progress and environment through multimodal sensing

Deriving Design Needs for AI Cooking Assistants

Cooking involves constant context switching between understanding instructions and performing physical tasks in real time. This research explored how to design multimodal cooking assistants that understand user progress and environmental context and that support natural interaction flows.


The Problem

Cooking assistance requires understanding context, not just controlling media

Following cooking videos requires users to constantly alternate attention between understanding video instructions and performing those steps in their physical environment. Current video navigation tools treat this as a simple media control problem, ignoring the rich contextual information available in the cooking environment.

Context switching creates cognitive overhead

Users must mentally track their progress, map video instructions to their specific setup, and troubleshoot differences between what they see on screen and what is actually happening in their kitchen.

How to design effective systems

Many existing cooking assistants and research efforts focus on agents that only process video. However, with recent advances in intelligent multimodal agent systems, we can now envision agents that offer contextually relevant assistance. The challenge lies in how to design such systems effectively.

What I Did

Wizard-of-Oz Study Design

Designed and conducted a controlled study simulating AI-powered contextual assistance through human operators

Interaction Analysis

Analyzed 30+ hours of cooking sessions to understand query patterns, workflow alignment, and assistant needs

Design Framework

Derived design principles for context-aware assistants that extend beyond the cooking domain

User Study

Wizard-of-Oz study setup: the researcher could see the participant's cooking environment while controlling a shared video interface

Research Goal

How can we design context-aware assistants that support users as they switch focus between following procedural video instructions and performing cooking tasks in real-time?

- What types of contextual information enable more effective cooking assistance?

- How do users naturally interact with environment-aware assistants during hands-on tasks?

- What design patterns from cooking assistance can generalize to other procedural domains?

Video conference setup: Participants joined from their home kitchens while researchers controlled a shared video screen

Environmental awareness simulation: Researchers could see the participant's cooking space and context in real time

Natural interaction: Participants encouraged to ask any questions they would want from an ideal cooking assistant

Multi-stream recording: User video, audio, all verbal interactions, and environmental sounds

Recipe selection: Participants chose an unfamiliar but interesting dish from 5 options and selected their own YouTube video for it

Session structure: 1-hour cooking sessions with pre/post surveys and demographic data

Used a Wizard-of-Oz methodology rather than a full AI implementation to focus on interaction patterns and user needs without being constrained by current AI limitations. This allowed us to simulate ideal contextual awareness and study user expectations.

UX Analysis Framework

Open Coding & Thematic Analysis

Two researchers independently performed line-by-line open coding on session transcripts and survey responses using established thematic analysis methods. Initial themes included "asking to replay video near end of cooking step" and "interactions requiring multimodal sensing."

Interaction Categorization Framework

Systematically grouped all user interactions into distinct categories based on intent, context, and required assistant capabilities. Categories emerged from the data rather than from a predetermined framework.

Interaction categorization framework derived from thematic analysis

User Persona Development

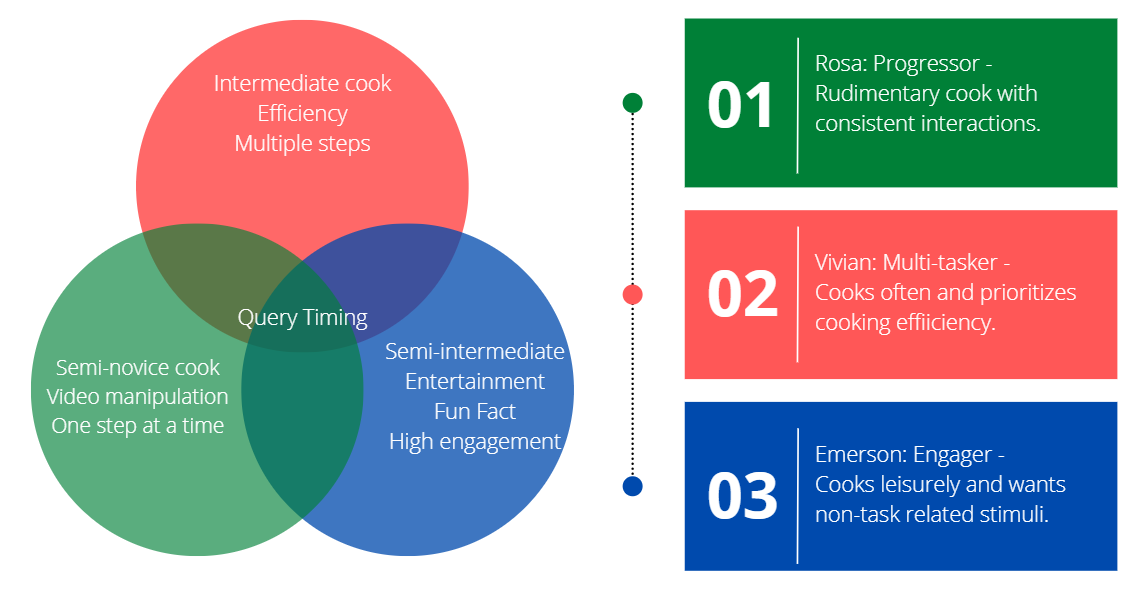

Used human-centered design methods to collaboratively build user personas based on cooking expertise, interaction patterns, and assistant usage preferences. Personas were finalized through researcher consensus and validated against participant data.

Data-driven user personas showing distinct interaction and assistance needs

Task Flow & Interaction Mapping

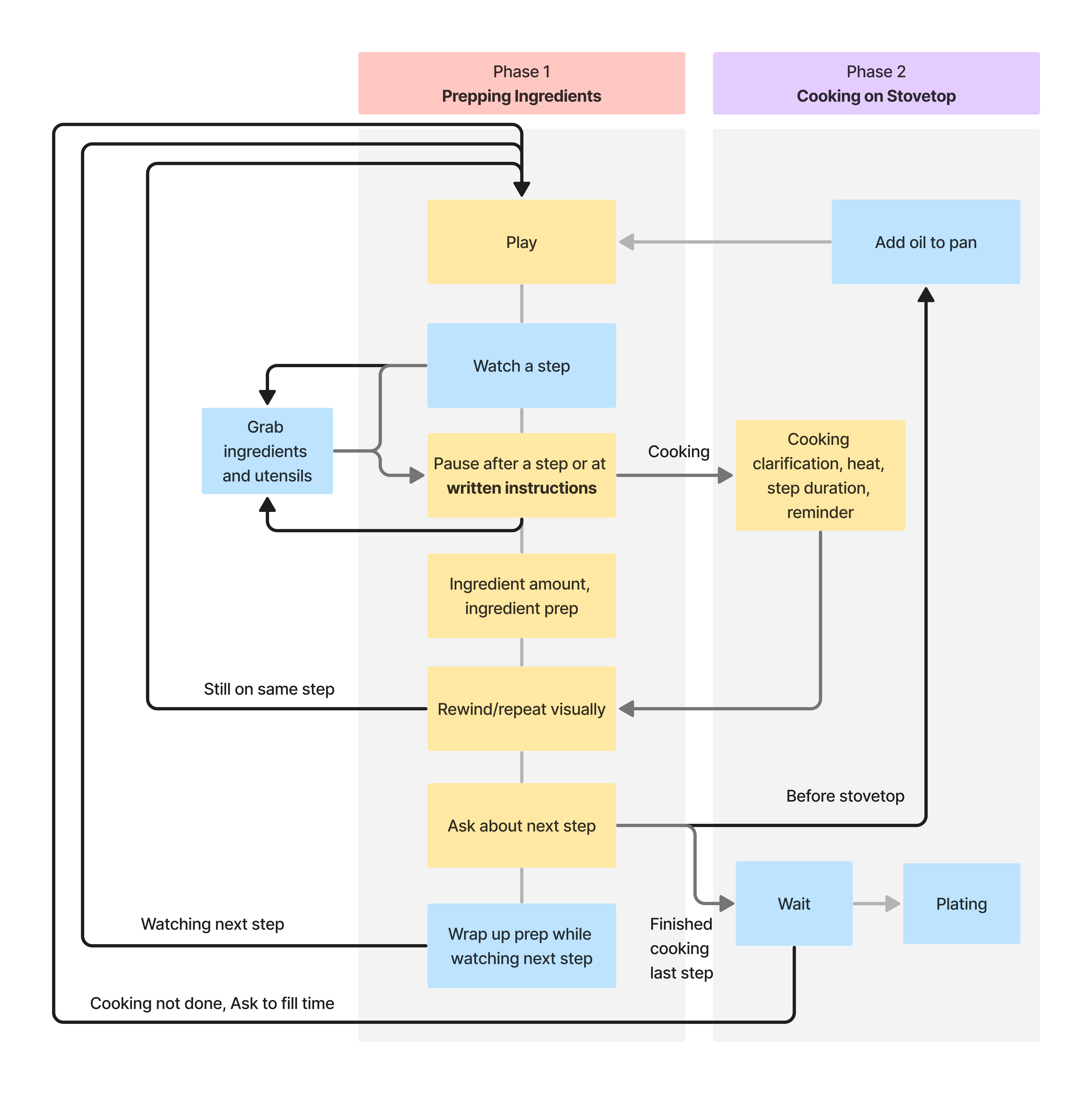

Derived typical cooking task flows and mapped where context switches and assistant interactions naturally occurred. Identified predictable patterns across different cooking styles and expertise levels.

Example cooking task flow highlighting natural context switch points and assistant intervention opportunities

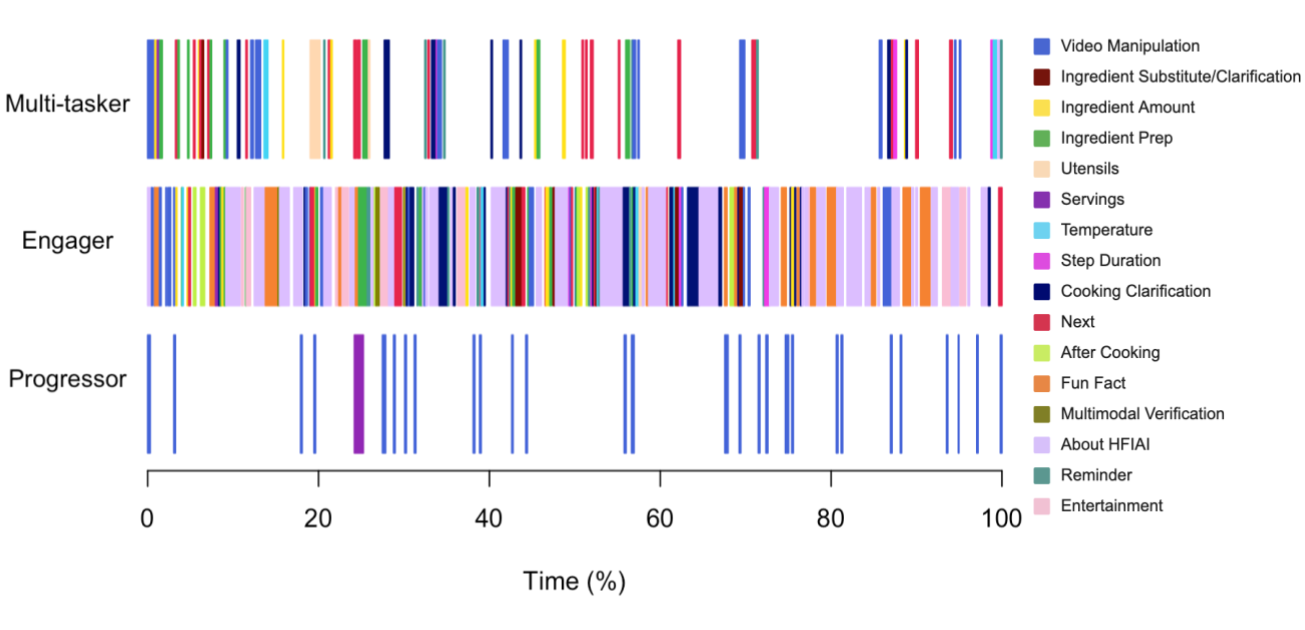

Persona-Based Interaction Analysis

Created comprehensive interaction profiles by mapping each participant's query patterns to derived personas, revealing distinct usage patterns and assistant needs across user types.

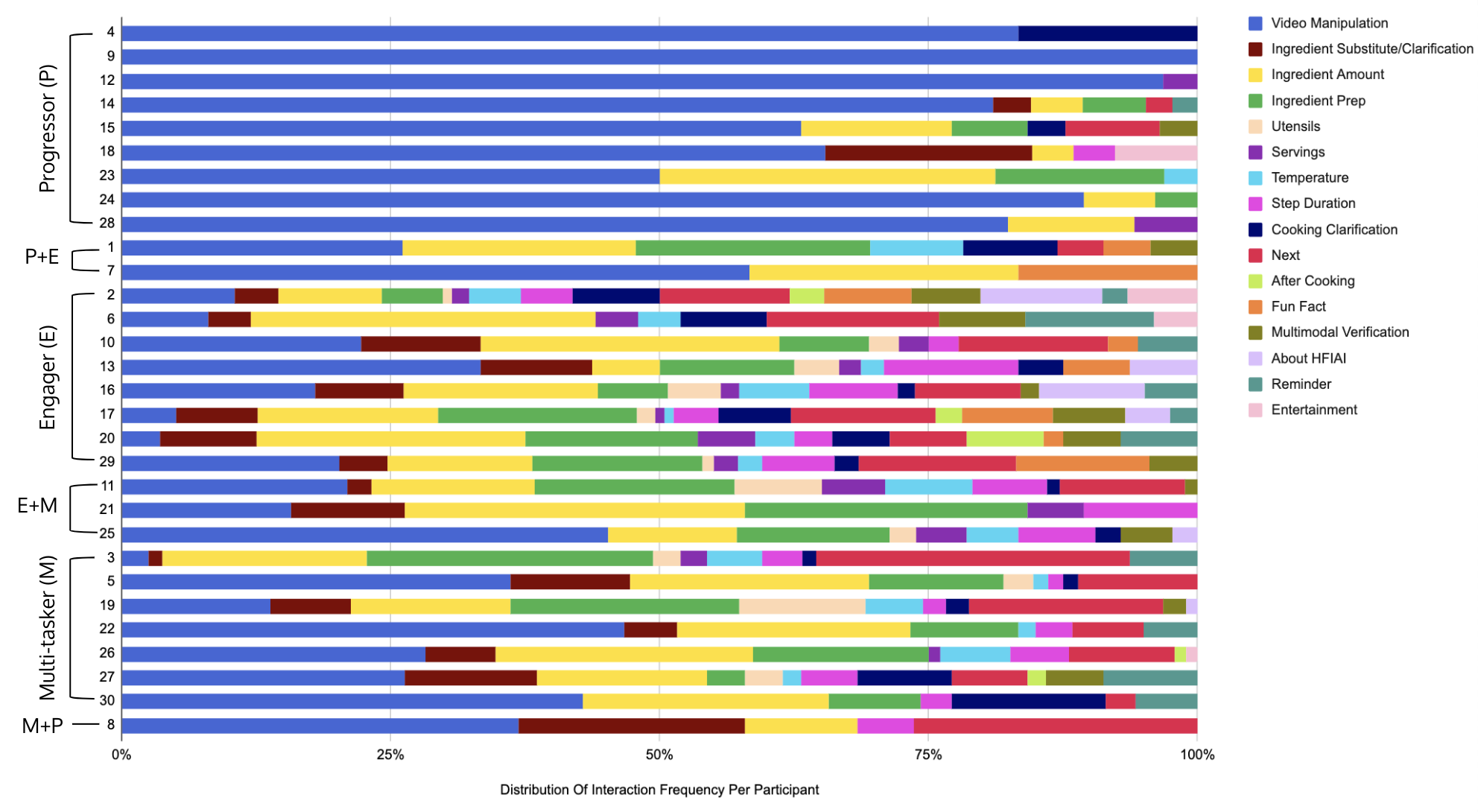

Bar chart analysis: participant interactions categorized and grouped by derived personas

Key Findings

Analysis revealed three primary query categories that emerged naturally during context-aware cooking assistance:

- Progress queries: "Did I add enough salt?" "Is this the right consistency?"

- Adaptation queries: "I don't have a stand mixer, what should I do?" "My pan is smaller than in the video"

- Timing queries: "How much longer should this simmer?" "When should I start the next step?"

These query types rarely occur with traditional video controls, suggesting that environmental awareness unlocks new interaction possibilities.

While users had different interaction preferences and query patterns, their overall workflow structure showed surprising consistency:

- All users followed similar preparation → execution → validation cycles

- Context switches occurred at predictable points (ingredient prep, technique changes, timing decisions)

- Environmental queries clustered around decision points and skill-adaptation moments

This suggests context-aware systems can predict when assistance will be needed, even across diverse user preferences.

Environmental awareness allowed the system to anticipate user needs before explicit requests:

- Computer vision detected when ingredients were missing or substituted

- Audio cues indicated technique struggles (e.g., inadequate whisking sounds)

- Sensor data revealed timing issues (oven preheating, pan temperature)

- Combined signals enabled contextually relevant suggestions at optimal moments

Users reported feeling "understood" by the system rather than simply "controlled," leading to higher task confidence and better learning outcomes.
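In the study this awareness came from human operators rather than software, so the following is not the study's system. As a minimal sketch, assuming hypothetical detector outputs (`seen` from a vision model, `whisk_energy` from audio, `oven_ready` from an oven sensor) and illustrative thresholds, the Python below shows one way "combined signals" and "optimal moments" could fit together: each signal yields candidate suggestions, and a timing rule holds them until the user reaches a step boundary, the kind of predictable context-switch point observed in the sessions. All names (`Context`, `candidate_suggestions`, `deliver`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical snapshot of what an environment-aware assistant perceives."""
    step: str                # current recipe step, e.g. "whisk eggs"
    needed: frozenset        # ingredients the step calls for
    seen: frozenset          # ingredients a (hypothetical) vision model detects
    whisk_energy: float      # hypothetical audio score in [0, 1]
    oven_ready: bool         # hypothetical oven sensor reading
    at_step_boundary: bool   # True when the user is between steps


def candidate_suggestions(ctx: Context) -> list:
    """Map individual signals to candidate suggestions (illustrative rules and thresholds)."""
    out = []
    missing = sorted(ctx.needed - ctx.seen)
    if missing:  # vision signal: an expected ingredient is not on the counter
        out.append("Missing " + ", ".join(missing) + "; want a substitute?")
    if "whisk" in ctx.step and ctx.whisk_energy < 0.3:  # audio signal: weak whisking
        out.append("Try whisking faster to incorporate more air.")
    if "bake" in ctx.step and not ctx.oven_ready:  # sensor signal: oven not yet hot
        out.append("The oven is still preheating; prep the next step meanwhile.")
    return out


def deliver(ctx: Context, pending: list) -> list:
    """Combine signals with timing: queue suggestions while the user is mid-task
    and release them all at the next step boundary (a context-switch point)."""
    for suggestion in candidate_suggestions(ctx):
        if suggestion not in pending:  # avoid repeating the same suggestion
            pending.append(suggestion)
    if not ctx.at_step_boundary:
        return []
    released = list(pending)
    pending.clear()
    return released


if __name__ == "__main__":
    queue = []
    busy = Context("whisk eggs", frozenset({"eggs", "sugar"}), frozenset({"eggs"}), 0.2, True, False)
    print(deliver(busy, queue))  # [] : held while the user's hands are busy
    done = Context("whisk eggs", frozenset({"eggs", "sugar"}), frozenset({"eggs"}), 0.2, True, True)
    print(deliver(done, queue))  # both queued suggestions are released here
```

A learned model could replace these hand-written rules; the sketch only illustrates that a timing gate, separate from the individual detectors, can decide when a suggestion reaches the user.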

Reflection

This work demonstrates that effective AI assistance requires understanding not just user intent but also environmental context and task progression. The multimodal sensing approach and the interaction patterns identified here extend beyond cooking to any domain involving procedural guidance in physical environments.