Captioning on Glass

Exploring real-time captioning using head-worn displays like Google Glass to support d/Deaf and hard-of-hearing users in both stationary and mobile tasks.

Real-Time Captioning for Head-Worn Displays

Designing accessible captioning interfaces that support d/Deaf and hard-of-hearing users across static and dynamic environments using Google Glass and other head-worn display technologies.

The Problem

Current captioning solutions don't support natural interaction in dynamic environments

By 2050, the WHO estimates 1 in 10 people will have disabling hearing loss. While smartphone captioning has become more common, these solutions require users to look down at devices, creating barriers in mobile contexts where hands-free use and environmental awareness are essential for safety and social interaction.

Visual attention fragmentation disrupts task flow

Users must constantly shift focus between their environment, the person speaking, and caption displays on handheld devices, which adds cognitive overhead and causes them to miss critical contextual information.

Mobile scenarios require hands-free accessibility

Navigation, workplace tasks, and social interactions demand solutions that don't occupy users' hands or require them to break eye contact with their environment and conversation partners.

What I Did

Prototype Development

Built cross-platform captioning prototypes for Google Glass and Vuzix Blade, optimizing for latency and readability

Comparative User Studies

Designed and conducted controlled experiments comparing HWD vs. smartphone captioning across stationary and mobile contexts

Interaction Design Analysis

Analyzed user behavior patterns, response times, and comfort metrics to derive design principles for accessible HWD interfaces

Experimental Design & Methodology

Real-time captioning interface prototype running on Google Glass Enterprise Edition

Research Hypothesis

Head-worn displays provide statistically significant improvements in response time, cognitive workload, and task performance compared to smartphone-based captioning in both stationary and mobile contexts.

- H₁: HWDs will demonstrate faster response times and lower cognitive workload in controlled tasks

- H₂: Mobile navigation scenarios will show greater performance advantages for HWDs vs. smartphones

- H₃: Subjective preference measures will favor HWD modalities across comfort and usability dimensions

Stationary study

Experimental design: Within-subjects comparison using balanced Latin square counterbalancing

Independent variables: Display modality (Google Glass EE2, Vuzix Blade, Razer Phone)

Dependent measures: NASA-TLX workload scores, task completion accuracy, head movement frequency

Controls: Noise-cancelling headphones, standardized instruction delivery, identical task complexity
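The balanced Latin square counterbalancing mentioned above can be sketched as follows. This is a minimal illustration using the standard Williams-design construction, not the study's actual assignment script; the function name `balanced_latin_square` and the short condition labels are assumptions made for the example. With an odd number of conditions (three devices here), the construction needs the mirrored orders as well, which yields six presentation orders.

```python
def balanced_latin_square(conditions):
    """Return presentation orders forming a balanced (Williams) Latin square.

    Hypothetical helper for illustration. Each condition appears equally
    often in every position, and every ordered pair of conditions is
    adjacent equally often, which balances first-order carryover effects.
    """
    n = len(conditions)
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Remaining rows are cyclic shifts of the first row.
    rows = [[(x + shift) % n for x in first] for shift in range(n)]
    # For an odd number of conditions, add the reversed rows to restore balance.
    if n % 2 == 1:
        rows += [list(reversed(row)) for row in rows]
    return [[conditions[i] for i in row] for row in rows]


orders = balanced_latin_square(["Glass", "Vuzix", "Phone"])
for participant, order in enumerate(orders, start=1):
    print(participant, order)
```

Participants would then be assigned to orders in rotation (participant i takes order i modulo the number of orders), so full balance is reached when the sample size is a multiple of six.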

Mobile name detection study

Experimental design: Repeated measures with counterbalanced room navigation order

Task protocol: Multi-floor navigation with random name presentation (8-12s intervals)

Response metrics: Button-press reaction times, false positive rates, subjective workload

Environmental controls: Indoor lighting, standardized acoustic conditions, identical navigation distance
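The name presentation and response metrics above can be sketched in code. This is an illustrative reconstruction under stated assumptions: it assumes the participant presses a button whenever a target name appears in the captions, and that a press counts as a valid response only within a fixed window after the name's onset. The function names, the `window_s` parameter, and its 8-second default are hypothetical and not taken from the study.

```python
import random


def schedule_names(duration_s, min_gap=8.0, max_gap=12.0, seed=None):
    """Return name onset times (seconds) spaced by random 8-12 s intervals."""
    rng = random.Random(seed)
    t, onsets = 0.0, []
    while True:
        t += rng.uniform(min_gap, max_gap)
        if t >= duration_s:
            return onsets
        onsets.append(t)


def score_presses(onsets, presses, window_s=8.0):
    """Match button presses to name onsets.

    A press is credited to the earliest unanswered onset that occurred at
    most window_s seconds before it (window_s is an assumed parameter).
    Unmatched presses count as false positives; unanswered onsets as misses.
    """
    reaction_times, false_positives, answered = [], 0, set()
    for press in sorted(presses):
        match = next(
            (i for i, onset in enumerate(onsets)
             if i not in answered and 0 <= press - onset <= window_s),
            None,
        )
        if match is None:
            false_positives += 1
        else:
            answered.add(match)
            reaction_times.append(press - onsets[match])
    misses = len(onsets) - len(answered)
    return reaction_times, false_positives, misses


# Made-up example: three onsets, two valid presses, one stray press.
rts, fps, misses = score_presses([10.0, 20.0, 30.0], [12.5, 25.0, 29.0])
print(rts, fps, misses)  # [2.5, 5.0] 1 1
```

Keeping the response window no longer than the minimum gap between names means a single press can never plausibly belong to two different onsets.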

The Microsoft Azure Cognitive Services Speech-to-Text API provided real-time transcription, followed by a custom post-processing pipeline. The cross-platform implementation ensured identical caption delivery timing across all devices.

Statistical Analysis Framework

NASA-TLX Workload Analysis

Conducted paired t-tests across the six NASA-TLX workload dimensions (mental, physical, temporal, performance, effort, frustration) with Bonferroni correction for multiple comparisons, and calculated effect sizes (Cohen's d) and statistical power for each comparison. Overall workload differed significantly between the HWD and smartphone conditions.

| NASA-TLX dimension | Glass vs. Phone (n=12) | Vuzix vs. Phone (n=8) |
|---|---|---|
| Overall | p=0.003*, 1−β=0.778, d=0.983 | p=0.016*, 1−β=0.614, d=0.967 |
| Mental | p=0.019* | p=0.015*, 1−β=0.748, d=1.156 |
| Physical | p=0.104 | p=0.035*, 1−β=0.610, d=0.961 |
| Effort | p=0.006*, 1−β=0.813*, d=1.034 | p=0.430 |
| Frustration | p=0.013* | p=0.306 |

Note: * marks p < 0.05 for p-values and 1−β > 0.8 for power.
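The paired comparison behind each table cell can be sketched as below. This is a minimal standard-library illustration, not the analysis script used in the study. It assumes Cohen's d is computed on the paired differences (often written d_z); the source does not say which variant was used. The scores in the example are made up for demonstration and are not study data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_and_d(hwd_scores, phone_scores):
    """Return (t statistic, Cohen's d_z, degrees of freedom) for paired scores."""
    diffs = [h - p for h, p in zip(hwd_scores, phone_scores)]
    n = len(diffs)
    sd = stdev(diffs)               # sample standard deviation of the differences
    t = mean(diffs) / (sd / sqrt(n))
    d_z = mean(diffs) / sd          # assumed effect-size variant: d on differences
    return t, d_z, n - 1


def bonferroni_alpha(alpha, num_tests):
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / num_tests


# Illustrative workload scores only (lower is better).
hwd = [30, 42, 25, 50, 38, 45]
phone = [40, 55, 35, 52, 50, 60]
t, d_z, df = paired_t_and_d(hwd, phone)
print(f"t({df}) = {t:.3f}, d_z = {d_z:.3f}")
print(f"Bonferroni threshold for 6 dimensions: {bonferroni_alpha(0.05, 6):.4f}")
```

Converting the t statistic to a p-value requires a t-distribution routine; if SciPy is available, `scipy.stats.ttest_rel` returns the statistic and p-value directly.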

Response Time Distribution Analysis

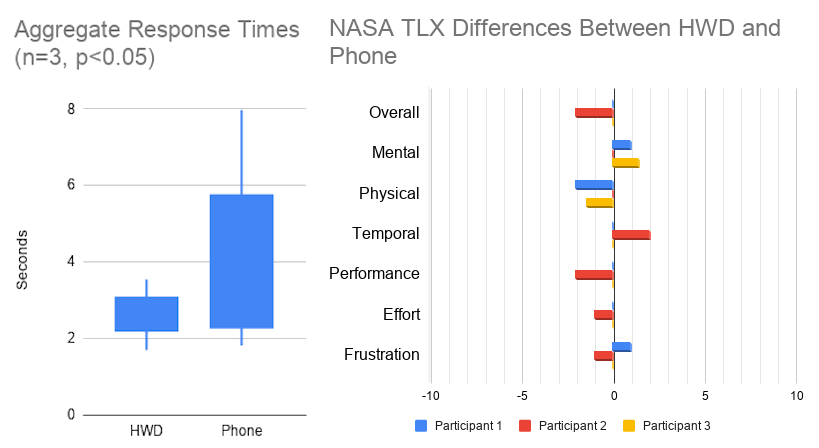

Figure: Aggregate response times (left) and difference in TLX scores (right; negative values indicate a lower HWD workload).

Response times were analyzed with a repeated measures ANOVA using device condition as the within-subjects factor; counterbalanced presentation order controlled for learning effects.
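For readers unfamiliar with the repeated measures ANOVA mentioned here, the F statistic for a single within-subjects factor can be computed as in the sketch below. It is a textbook one-way formulation under the assumption of complete data (every participant measured in every condition), not the study's analysis code; the function name `rm_anova_f` and the example scores are illustrative.

```python
def rm_anova_f(data):
    """One-way repeated measures ANOVA.

    data[s][c] is the score of subject s under condition c.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Removing between-subject variance is what distinguishes the
    # repeated measures design from a between-subjects ANOVA.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Made-up response times (seconds) for four participants, two conditions.
f_stat, df1, df2 = rm_anova_f([[1, 3], [2, 5], [4, 4], [3, 6]])
print(f_stat, df1, df2)  # 8.0 1 3
```

With only two conditions the F statistic equals the square of the paired t statistic, which is a convenient sanity check. A p-value would come from the F distribution with the returned degrees of freedom, for example via `scipy.stats.f.sf` if SciPy is available.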

Statistical Results & Findings

Paired t-tests revealed statistically significant differences in cognitive workload measures between HWD and smartphone conditions:

- Overall NASA-TLX: Google Glass vs. Phone (p=0.003, d=0.983, 1-β=0.778)

- Mental workload: Vuzix vs. Phone (p=0.015, d=1.156, 1-β=0.748)

- Effort reduction: Google Glass vs. Phone (p=0.006, d=1.034, 1-β=0.813)

- Physical workload: Vuzix vs. Phone (p=0.035, d=0.961, 1-β=0.610)

All of the results listed above had statistical power above 0.60, but only the Google Glass effort comparison (1−β=0.813) exceeded the conventional 0.80 threshold for adequate power.

Mobile name detection study demonstrated quantifiable performance advantages for HWD modalities:

- Response time improvement: HWD mean 2.7s vs. Phone mean 4.3s (p=0.033)

- Reduced variability: HWD σ=0.706s vs. Phone σ=2.222s (68% reduction in standard deviation)

- Comfort preference: Significant preference for HWD comfort (p<0.001)

- Consistent detection: Lower false negative rates in dynamic navigation contexts

The 1.63-second improvement represents a 37% reduction in reaction time with substantially improved consistency.
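The percentages quoted above follow from a simple relative-reduction calculation, shown here as a quick check against the reported figures. The helper name `percent_reduction` is illustrative. Note that the rounded means give a difference of 1.6 s; the 1.63 s figure presumably comes from unrounded means, which is an assumption since the raw values are not given.

```python
def percent_reduction(baseline, value):
    """Relative reduction of value with respect to baseline, in percent."""
    return 100.0 * (baseline - value) / baseline


# Reported means: phone 4.3 s, HWD 2.7 s.
rt_reduction = percent_reduction(4.3, 2.7)
# Reported standard deviations: phone 2.222 s, HWD 0.706 s.
sd_reduction = percent_reduction(2.222, 0.706)

print(f"Reaction time reduction: {rt_reduction:.1f}%")       # 37.2%
print(f"Standard deviation reduction: {sd_reduction:.1f}%")  # 68.2%
```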

Systematic performance benchmarking revealed platform-dependent trade-offs affecting user outcomes:

- Speech recognition accuracy: Phone 96% > Vuzix 95% > Google Glass 86%

- Display clarity trade-offs: The clearer Glass display reduced eyestrain, whereas the Vuzix display drew complaints of fuzziness

- Recognition error correlation: Glass frustration scores were linked to its ASR accuracy, which was 10 percentage points lower than the phone's (86% vs. 96%)

- Physical comfort factors: Weight distribution and thermal comfort affected sustained usage

Technical implementation choices directly impacted statistical outcomes, highlighting the importance of platform optimization.
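The source does not state how speech recognition accuracy was measured. One common approach, sketched here as an assumption rather than the study's method, is word accuracy defined as 1 minus the word error rate (WER), where WER is the word-level edit distance between a reference transcript and the ASR output divided by the reference length. The function name and the example sentences are illustrative.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance (substitutions, insertions, deletions)
    divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,                           # deletion
                cur[j - 1] + 1,                        # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        prev = cur
    return prev[-1] / len(ref)


wer = word_error_rate("please meet me in room two", "please meet in room too")
print(f"WER = {wer:.3f}, word accuracy = {1 - wer:.3f}")
```

In this made-up example one word is dropped and one is substituted, giving two errors over six reference words.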

Reflection

This project demonstrated the promise of head-worn displays for enabling accessible, real-time captioning in both stationary and mobile situations. While smartphones remain ubiquitous, HWDs offer hands-free, contextually integrated caption delivery that can improve social and task-related communication for DHH users. Future work may explore how to improve display readability, comfort for extended wear, and integration with modern ASR systems in noisy or outdoor environments.

References

2020

- Towards an Understanding of Real-time Captioning on Head-worn Displays. In 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services, 2020.